3.1 An overview of FLaVor FL

This page shows the PI how to modify custom code, with the help of FLaVor, so that it fits the interface of the AI-Lab federated learning framework, and how to build it into a Docker image. The examples use the FLaVor FL example code for PyTorch; the same approach carries over to other frameworks. Full examples of converting custom code to FLaVor-styled code are presented in the following sections of this chapter.

Prerequisites

Assume our file structure looks like this:

    CoPI
    |---dataset
    |   |---img1.jpg
    |   |---img2.jpg
    |   |---labels.csv
    |---dataset.zip         # compressed from dataset

    PI
    |---main.py             # custom training / validation code
    |---requirements.txt    # the packages that main.py needs, plus FLaVor
    |---Dockerfile          # script for building an image that contains main.py and has the packages in requirements.txt installed

The parts shown in black already exist.

Modify main.py

Before:

    load dataset from local

After:

    import flavor                                                       # step 1
    load dataset from os.environ["INPUT_PATH"]                          # step 4
    SetEvent("TrainInitDone")                                           # step 5
    epochs=-1
    while 1:                                                            # step 2
        epochs+=1
        WaitEvent("TrainStarted")                                       # step 5
        load model from aggregator by os.environ["GLOBAL_MODEL_PATH"]   # step 4
        Prepare an output dictionary                                    # step 3
        SaveInfoJson(dictionary)                                        # step 5
        training 1 epoch                                                # step 2
        save model to os.environ["LOCAL_MODEL_PATH"]                    # step 4
        SetEvent("TrainFinished")                                       # step 5
    SetEvent("ProcessFinished")                                         # step 5 (Optional in most cases)

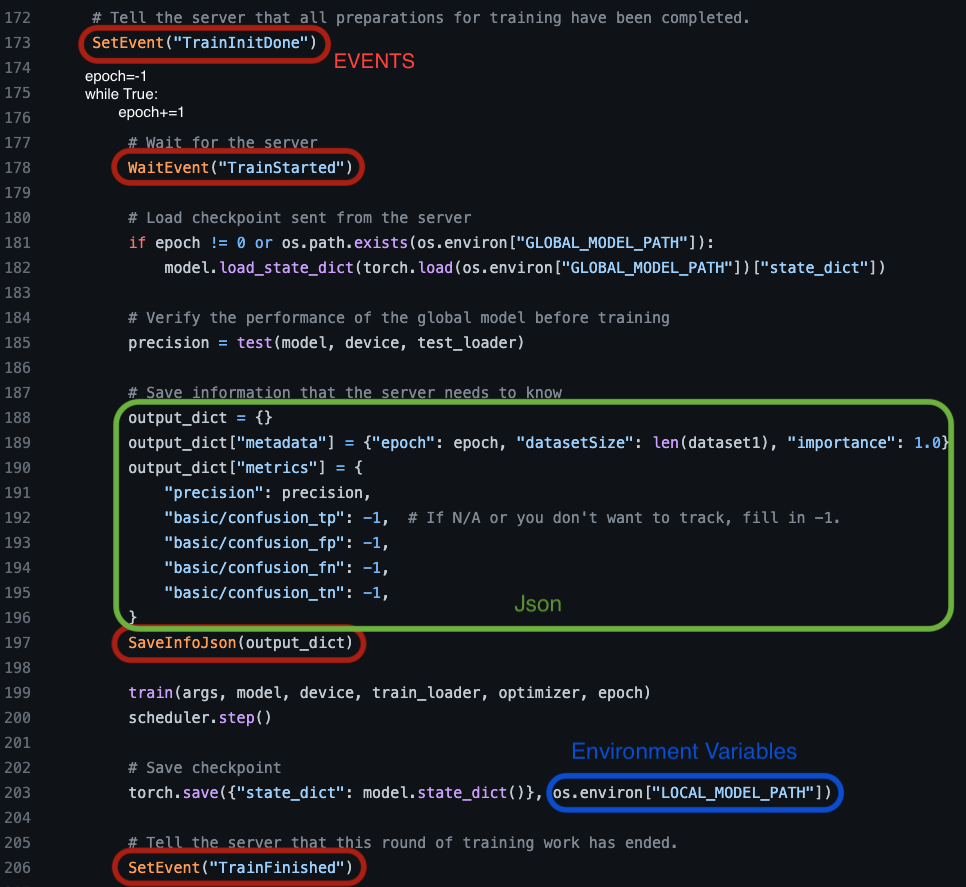

The following steps explain each modification in detail. The image above shows part of an example. Note that the modified code cannot be run locally, because FLaVor and the other packages are installed inside the Docker image.

Step 1 - Import FLaVor modules

Add the following code at the beginning of main.py.

    from flavor.cook.utils import SaveInfoJson, SetEvent, WaitEvent
    import os, pickle  # pickle will be used if your framework is NOT PyTorch

Step 2 - Make the training process in a for loop

If you use a framework such as PyTorch, the training process is already written as a loop. However, some frameworks, such as TensorFlow Keras, pack the training process into a single function call, e.g. model.fit(...). In that case, split the training into a loop that runs one epoch per iteration, as in the example below.

Before:

    model.fit(xTrain, yTrain, epochs=epochs)

After:

    epochs=-1
    while 1:
        epochs+=1
        ...
        model.fit(xTrain, yTrain, epochs=1)

Step 3 - (OUTPUT INTERFACE) Prepare a dictionary

The dictionary must follow the format shown in the green box in the example image above, and it must be prepared inside the training loop but before the training step. The detailed specification is as follows:

- metadata: dict # Must
  - epoch: int
  - datasetSize: int # The number of training data.
  - importance: float # Epoch weight. Defaults to equal weights of 1.0.
- metrics: dict # You can add any other keys (str) with values (float).
  - precision: float
  - basic/confusion_tp: float
  - basic/confusion_fp: float
  - basic/confusion_fn: float
  - basic/confusion_tn: float

Be careful: NumPy scalar types such as numpy.float32 and numpy.int64 are NOT JSON serializable and cannot be saved into a JSON file. Convert them with int() or float() in advance.

If any value may be NaN, check it with math.isnan(...) or numpy.isnan(...) so that NaN is not stored in the JSON file.
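The two notes above can be combined into a small helper. The following is a minimal sketch, not part of FLaVor: the names `to_json_number` and `build_output_dict` are hypothetical, and replacing NaN with 0.0 is an assumption chosen only for illustration (pick whatever fallback makes sense for your metric).

```python
import json
import math


def to_json_number(value, default=0.0):
    """Convert a numeric value to a built-in float, replacing NaN with `default`."""
    number = float(value)  # also turns NumPy scalars into a built-in float
    if math.isnan(number):
        return default  # assumption: 0.0 is an acceptable fallback
    return number


def build_output_dict(epoch, dataset_size, metrics):
    """Assemble the dictionary in the format specified above."""
    return {
        "metadata": {
            "epoch": int(epoch),
            "datasetSize": int(dataset_size),
            "importance": 1.0,  # equal epoch weight
        },
        "metrics": {str(k): to_json_number(v) for k, v in metrics.items()},
    }


output_dict = build_output_dict(
    epoch=0,
    dataset_size=2,
    metrics={
        "precision": float("nan"),  # e.g. no positive predictions yet
        "basic/confusion_tp": 0,
        "basic/confusion_fp": 0,
        "basic/confusion_fn": 1,
        "basic/confusion_tn": 1,
    },
)

# allow_nan=False makes json raise an error if a NaN slipped through.
print(json.dumps(output_dict, allow_nan=False, indent=2))
```

In main.py, a dictionary built this way would then be passed to SaveInfoJson inside the training loop.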

Step 4 - (INPUT INTERFACE) Set environment variables

Replace every absolute path in your code with the corresponding environment variable from the table below, as shown in the blue box in the example image above.

| Code environment variables | Types | Explanation | Aggregator Dashboard Setting Plans Inputs |
| --- | --- | --- | --- |
| INPUT_PATH | Directory | Training dataset | Datasets location |
| LOCAL_MODEL_PATH | Directory | Saved weights after training | AI model weights location |
| GLOBAL_MODEL_PATH | Directory | Pre-trained weights and load per round | AI model weights location |
| (Optional) OUTPUT_PATH | Directory | Additional outputs | --- |
| (Optional) LOG_PATH | Directory | Additional logs | Log files location |

- The design is for flexibility. It is strongly recommended NOT to pack the pre-trained weights and the dataset into the Docker image, which is why environment variables are necessary.

- The environment variables are passed in during "offline testing" and "online plan setting", so DON'T set their values in main.py or the Dockerfile.

- "Offline testing" refers to "Step 8 - (Optional) Test whether the docker image runs properly" on this page.

- "Online plan setting" refers to the last column, "Aggregator Dashboard Setting Plans Inputs", which corresponds to "Step 4 - PI setting up a training plan" on the page 5.1 Getting started.

- For debugging errors, writing files to LOG_PATH is a recommended method.
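As an illustration of the points above, the following sketch checks that the required variables are present and writes a debug line to LOG_PATH. It is only a sketch under stated assumptions: the helper names `missing_variables` and `write_log` and the file name `debug.log` are hypothetical and not part of FLaVor, and the demonstration uses a throwaway mapping instead of the real environment.

```python
import os
import tempfile

REQUIRED_VARS = ("INPUT_PATH", "LOCAL_MODEL_PATH", "GLOBAL_MODEL_PATH")


def missing_variables(environ=os.environ):
    """Return the required variables that are not set (or are empty)."""
    return [name for name in REQUIRED_VARS if not environ.get(name)]


def write_log(message, environ=os.environ, filename="debug.log"):
    """Append a line to a file in LOG_PATH; do nothing if LOG_PATH is unset."""
    log_dir = environ.get("LOG_PATH")
    if not log_dir:
        return None
    os.makedirs(log_dir, exist_ok=True)
    path = os.path.join(log_dir, filename)
    with open(path, "a", encoding="utf-8") as handle:
        handle.write(message + "\n")
    return path


# Demonstration with a throwaway mapping; main.py itself should only read
# the variables and never assign values to them.
demo_env = {"INPUT_PATH": "/data", "LOG_PATH": tempfile.mkdtemp()}
missing = missing_variables(demo_env)
log_file = write_log("missing variables: " + ", ".join(missing), demo_env)
print(missing)
```

Calling `missing_variables()` without arguments at the top of main.py would inspect the real environment and can make a misconfigured plan easier to diagnose.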

[INPUT_PATH]

Here are some examples:

| Before | After |
| --- | --- |
| img = cv2.imread("./dataset/img1.jpg") # import cv2 | img = cv2.imread(os.environ["INPUT_PATH"]+"/dataset/img1.jpg") # import cv2 |
| df = pd.read_csv("./data.csv") # import pandas as pd | df = pd.read_csv(os.environ["INPUT_PATH"]+"/data.csv") # import pandas as pd |
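String concatenation works, but `os.path.join` avoids mistakes with missing or doubled slashes. The following is a small sketch; the helper name `input_file` is hypothetical, and the `setdefault` call exists only so the snippet runs on its own (on the platform, INPUT_PATH is passed in from outside and must not be set in main.py).

```python
import os


def input_file(*parts):
    """Build a path below INPUT_PATH from its components."""
    return os.path.join(os.environ["INPUT_PATH"], *parts)


# For demonstration only; do not set INPUT_PATH in main.py.
os.environ.setdefault("INPUT_PATH", "/data")

print(input_file("dataset", "img1.jpg"))
print(input_file("data.csv"))
```

The returned paths can be passed directly to calls such as cv2.imread(...) or pd.read_csv(...).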

[LOCAL_MODEL_PATH]

Insert the command that saves the model to LOCAL_MODEL_PATH after the preparation of the dictionary, once the epoch has been trained.

| PyTorch | TensorFlow Keras |
| --- | --- |
| weights={"state_dict":model.state_dict()} | weights = {"state_dict": {str(key): val for key,val in enumerate(model.get_weights())}} |
| torch.save(weights, os.environ["LOCAL_MODEL_PATH"]) | with open(os.environ["LOCAL_MODEL_PATH"], "wb") as F: pickle.dump(weights, F, protocol=pickle.HIGHEST_PROTOCOL) |

Users of other frameworks should refer to the examples in the following sections.

[GLOBAL_MODEL_PATH]

Load the merged weights from the aggregator. This code should be placed before the preparation of the dictionary.

PyTorch:

    if epoch != 0 or os.path.exists(os.environ["GLOBAL_MODEL_PATH"]):
        model.load_state_dict(
            torch.load(os.environ["GLOBAL_MODEL_PATH"])["state_dict"])

TensorFlow Keras:

    if epoch != 0 or os.path.exists(os.environ["GLOBAL_MODEL_PATH"]):
        with open(os.environ["GLOBAL_MODEL_PATH"], "rb") as F:
            weights = pickle.load(F)
        model.set_weights(list(weights["state_dict"].values()))
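For frameworks that rely on pickle, the save and load steps can be checked together without any deep learning framework installed. The sketch below uses plain Python lists as stand-ins for the arrays returned by model.get_weights(); the helper names and the file name `weights.ckpt` are hypothetical. Sorting the keys numerically on load is an extra precaution added here and is not required by the examples above.

```python
import os
import pickle
import tempfile


def save_weights(weight_list, path):
    """Save a list of weights in the {"state_dict": {...}} layout."""
    weights = {"state_dict": {str(i): w for i, w in enumerate(weight_list)}}
    with open(path, "wb") as handle:
        pickle.dump(weights, handle, protocol=pickle.HIGHEST_PROTOCOL)


def load_weights(path):
    """Load the weights back as a list, ordered by their numeric keys."""
    with open(path, "rb") as handle:
        weights = pickle.load(handle)
    state = weights["state_dict"]
    return [state[key] for key in sorted(state, key=int)]


# Demonstration: a temporary file stands in for LOCAL_MODEL_PATH and
# GLOBAL_MODEL_PATH, and nested lists stand in for weight arrays.
demo_path = os.path.join(tempfile.mkdtemp(), "weights.ckpt")
original = [[0.1, 0.2], [0.3]]
save_weights(original, demo_path)
restored = load_weights(demo_path)
print(restored == original)
```

In main.py, `path` would be os.environ["LOCAL_MODEL_PATH"] when saving and os.environ["GLOBAL_MODEL_PATH"] when loading.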

Step 5 - Set events

Add the following events to your code at the positions marked by the red boxes in the example image above.

- SetEvent("TrainInitDone") - before the training loop.

- WaitEvent("TrainStarted") - in the training loop, at the beginning of an epoch.

- SaveInfoJson(output_dict) - in the training loop, after the preparation of the dictionary.

- SetEvent("TrainFinished") - in the training loop, at the end of an epoch.

- (Optional in most cases) SetEvent("ProcessFinished") - If "python main.py" runs successfully on its own but raises an error after the whole script has finished when running on our platform, add this line at the end of the script.
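The ordering of these calls can be rehearsed with a dry run. In the sketch below, SetEvent, WaitEvent and SaveInfoJson are local stand-ins that only record the call order; they are NOT the FLaVor implementations. The fixed number of rounds and the placeholder `train_one_epoch` are assumptions made so that the snippet terminates; the real main.py loops with `while 1` as described in the overview.

```python
calls = []  # records the order of the calls for inspection


# Stand-ins for the FLaVor functions, for a dry run only. In main.py use:
#   from flavor.cook.utils import SaveInfoJson, SetEvent, WaitEvent
def SetEvent(name):
    calls.append("SetEvent:" + name)


def WaitEvent(name):
    calls.append("WaitEvent:" + name)


def SaveInfoJson(info):
    calls.append("SaveInfoJson:epoch=%d" % info["metadata"]["epoch"])


def train_one_epoch():
    calls.append("train")  # placeholder for the real training step


def run(rounds):
    """Walk through the event sequence for a fixed number of rounds."""
    SetEvent("TrainInitDone")  # before the training loop
    for epoch in range(rounds):
        WaitEvent("TrainStarted")  # at the beginning of an epoch
        output_dict = {
            "metadata": {"epoch": epoch, "datasetSize": 2, "importance": 1.0},
            "metrics": {},
        }
        SaveInfoJson(output_dict)  # after the preparation of the dictionary
        train_one_epoch()
        SetEvent("TrainFinished")  # at the end of an epoch
    SetEvent("ProcessFinished")  # optional in most cases


run(rounds=2)
print("\n".join(calls))
```

Printing the recorded calls gives a quick way to compare the structure of your own loop against the list above before building the image.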

Prepare requirements.txt

- If you use a PyTorch Docker image as the base image, you DON'T need to install PyTorch again. The same applies to other frameworks.

- List the packages one per line. Remember to add FLaVor. They will be installed by pip in a Docker container.

| # package1 |

Prepare Dockerfile, then build it and test it

Step 6 - Prepare Dockerfile

Create a Dockerfile that can generate an image that:

- has packages in requirements.txt installed.

- has main.py, and can run the checking (check-fl in the next step) successfully.

- has the command that executes the code through FLaVor (flavor-fl).

Here is an example:

    # base image
    FROM pytorch/pytorch:1.12.0-cuda11.3-cudnn8-runtime
    # copy files from host to the container
    COPY main.py /app/main.py
    # default login directory
    WORKDIR /app
    # install packages
    RUN pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
    # install FLaVor
    RUN pip install https://github.com/ailabstw/flavor/archive/refs/heads/release/stable.zip -U
    # MUST
    CMD flavor-fl -m "python main.py"

Comments are placed on their own lines because a Dockerfile does not treat a trailing "#" on an instruction line as a comment.

Step 7 - Pack the code into a docker image

Generate an image based on the Dockerfile. Here is an example command.

| docker build -t foo:v0 . |

Step 8 - (Optional) Test whether the docker image runs properly

Run a container that mounts the dataset and the pretrained weights. For instance:

| docker run -id --rm --gpus all --runtime=nvidia --shm-size=64g -v ./CoPI/dataset:/data -v ./weights/:/weights -w /app --name foo_test foo:v0 sleep inf & |

Log in to the container

| docker exec -it foo_test /bin/bash |

Run the check

| check-fl -m "python main.py" |

You will pass the environment variables at this step.


If everything works, "Run Successfully !!!" appears in the console. If any error occurs, fix the bugs in the code, then rebuild and retest the image (Step 7 and Step 8) until it runs successfully.


If you complete the steps on this page successfully, you can skip the rest of this chapter and go to 5.1 Getting started. Otherwise, refer to the full code examples for your framework, and the modifications they make, in the following sections.